Method

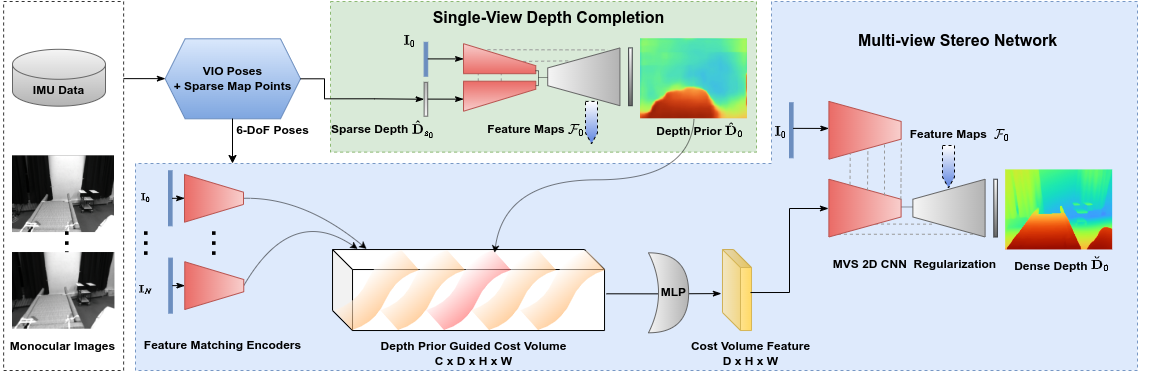

System overview of SimpleMapping. The VIO module takes monocular images and IMU data as input, estimates 6-DoF camera poses, and generates a local map of noisy sparse 3D points. The dense mapping process first performs single-view depth completion using the VIO sparse depth map $\hat{\mathbf{D}}_{s_0}$ and the reference image frame $\mathbf{I}_0$, then applies a multi-view stereo (MVS) network to infer a high-quality dense depth map for $\mathbf{I}_0$. The depth prior $\hat{\mathbf{D}}_0$ and the hierarchical deep feature maps $\mathbf{\mathcal{F}}_0$ from the single-view depth completion contribute to the cost volume formulation and to the 2D CNN cost volume regularization in the MVS network. The high-quality dense depth prediction from the MVS network, $\breve{\mathbf{D}}_0$, is then fused into a global TSDF grid map for a coherent dense reconstruction.
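To make the first step of the dense mapping stage concrete, the following is a minimal sketch of how a sparse depth map such as $\hat{\mathbf{D}}_{s_0}$ could be built by projecting sparse 3D points into the reference frame. It assumes a pinhole camera model; the function name `sparse_depth_map` and the parameters `points_w`, `T_cw`, and `K` are hypothetical and not taken from the SimpleMapping code, and the system's actual point selection and filtering may differ.

```python
import numpy as np

def sparse_depth_map(points_w, T_cw, K, height, width):
    """Project world-frame 3D points into a sparse depth image.

    points_w: (N, 3) points in the world frame (hypothetical VIO output).
    T_cw:     (4, 4) world-to-camera transform of the reference frame.
    K:        (3, 3) pinhole intrinsics (assumed camera model).
    Returns an (height, width) array where 0 marks pixels without a measurement.
    """
    points_w = np.asarray(points_w, dtype=np.float64)
    # Move the points into the camera frame using homogeneous coordinates.
    pts_h = np.hstack([points_w, np.ones((len(points_w), 1))])
    pts_c = (T_cw @ pts_h.T).T[:, :3]

    # Discard points behind (or numerically on) the image plane.
    z = pts_c[:, 2]
    front = z > 1e-6
    pts_c, z = pts_c[front], z[front]

    # Perspective projection to integer pixel coordinates.
    uv = (K @ pts_c.T).T
    u = np.round(uv[:, 0] / z).astype(int)
    v = np.round(uv[:, 1] / z).astype(int)

    # Keep only projections that fall inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    # If several points hit the same pixel, keep the nearest one.
    depth = np.full((height, width), np.inf, dtype=np.float64)
    np.minimum.at(depth, (v, u), z)
    depth[np.isinf(depth)] = 0.0
    return depth
```

Keeping the nearest depth per pixel is one simple design choice for handling collisions; because the VIO points are noisy, a real pipeline would likely add outlier rejection before passing the map to depth completion.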

Experimental Results

(Baseline denotes the same VIO frontend integrated with SimpleRecon.)

EuRoC Dataset

Here we present 3D reconstructions of the EuRoC V102 and V203 sequences. SimpleMapping consistently yields more detailed reconstructions, even in challenging scenarios.

ETH3D Dataset and Self-collected Dataset

As can be observed, both the baseline system and TANDEM suffer from inconsistent geometry and noticeable noise. SimpleMapping surpasses the other methods significantly in terms of dense mapping accuracy and robustness.

ScanNet Dataset

We showcase the comparable reconstruction performance of SimpleMapping, using only a monocular camera setup without an IMU, against Vox-Fusion, a state-of-the-art RGB-D dense SLAM method with a neural implicit representation. Here are the results on the ScanNet test set. Vox-Fusion tends to produce over-smoothed geometry and experiences drift during long-term tracking, resulting in inconsistent reconstruction, as observed in Scene0787.

Runtime Efficiency

We present the average per-keyframe runtime of each module and the per-frame runtime of the whole pipeline, evaluated on the EuRoC dataset. SimpleMapping achieves real-time performance, requiring only 55 ms to process one frame.
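As a quick sanity check on what the reported figure implies, the per-frame latency can be converted into a throughput. This is plain arithmetic on the 55 ms number above; the helper name `frames_per_second` is hypothetical.

```python
def frames_per_second(ms_per_frame: float) -> float:
    """Convert a per-frame processing time in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame

fps = frames_per_second(55.0)
print(f"{fps:.1f} frames per second")  # prints "18.2 frames per second"
```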